Problems

Managing secrets and other sensitive data is a troublesome and challenging task. A huge number of developers still store plaintext passwords on collaboration platforms such as GitLab, or keep credentials inside Docker images that anyone can access. At Cốc Cốc, we use self-hosted GitLab and Docker Harbor, both accessible only from our internal network. This helps prevent secrets from leaking to the outside, but it is not a real solution to the problem.

In a fast-paced development environment, the temptation to commit secrets directly to git or to hardcode credentials in source code is huge, so at first we put off restricting this practice. Over time, some of the tools we adopted, such as Ansible and Kubernetes, turned out to offer their own secret management, Ansible Vault and Kubernetes Secrets respectively, which made secrets easier to manage. But that was not the end of the story, and another problem arose: secrets were now controlled in many places, sometimes duplicated, and there was no unified way for services to retrieve them. And what about services managed by neither Ansible nor Kubernetes?

Since leaving things like that looked too amateurish, we decided to build a Secret Management solution that solves all of these problems thoroughly and cleanly.

***

Design Goals

At the start, we defined a set of goals to guide the architecture and development. Let’s review a few of these principles:

- No secrets on public resources: the ultimate goal.

- Ease of use: the solution should be easily integrated with the current development workflow, including the Kubernetes ecosystem and traditional services running on a host.

- Centralized, simple yet full-featured: the solution must control secrets centrally, yet still be highly available and scalable. It should also support essential features such as secret retrieval/revocation and authentication/authorization…

***

Implementation

Tech choice: HashiCorp Vault, a simple secret management service that supports key-value storage, token-based authentication, and Kubernetes authentication… For brevity, we won't introduce Vault itself here and only list the features we use.

Start Simple

In the beginning, we wanted a Vault service that simply worked for our purpose: a central place to manage secrets!

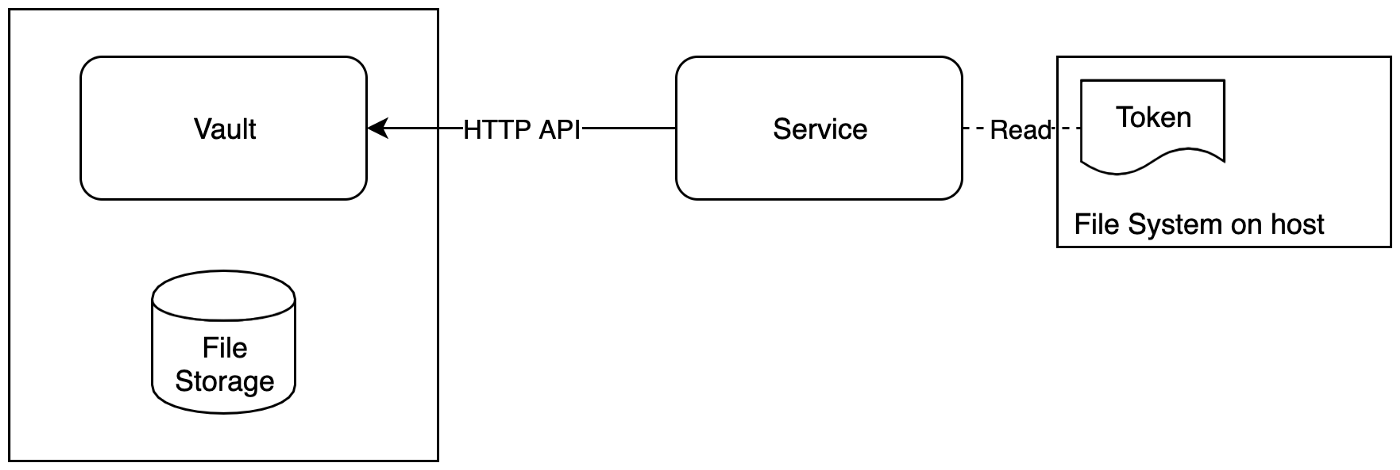

Simple Vault Implementation

Vault uses a token for authentication and authorization. In this implementation, the token is stored as a file on the host filesystem with proper permissions, and services that need to retrieve secrets from Vault read the token from that file.
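What "proper permissions" means is up to each setup. As one illustration, here is a minimal sketch that refuses to use a token file that group or other users can access. The owner-only policy and the helper name `read_token` are our assumptions, not part of the original setup; a dedicated group might legitimately need read access in some deployments.

```python
import os
import stat


def read_token(token_path):
    """Read a Vault token from a file, rejecting files group/others can access.

    Hypothetical helper: the owner-only (0600-style) policy is an assumption.
    """
    mode = os.stat(token_path).st_mode
    # Any read/write/execute bit for group or others means the token is overexposed.
    if mode & (stat.S_IRWXG | stat.S_IRWXO):
        raise PermissionError(
            "token file %s has mode %o; expected owner-only access"
            % (token_path, stat.S_IMODE(mode))
        )
    with open(token_path, "r") as fh:
        # Strip the trailing newline that editors and `echo` usually add.
        return fh.read().strip()
```

A service could call `read_token("/etc/coccoc/vault/token_admin")` instead of opening the file directly, so that a misconfigured token file fails loudly instead of being silently used.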

Vault itself needs a storage backend for its data. Although it supports many kinds of backends, such as databases like MySQL or configuration stores like ZooKeeper / etcd, we decided to keep the data on the filesystem for ease of management and to avoid a deadlock: for example, etcd needs a secret from Vault to form a cluster, while Vault would need to read its own data from etcd. Note that all of Vault’s data files in the file storage are encrypted.

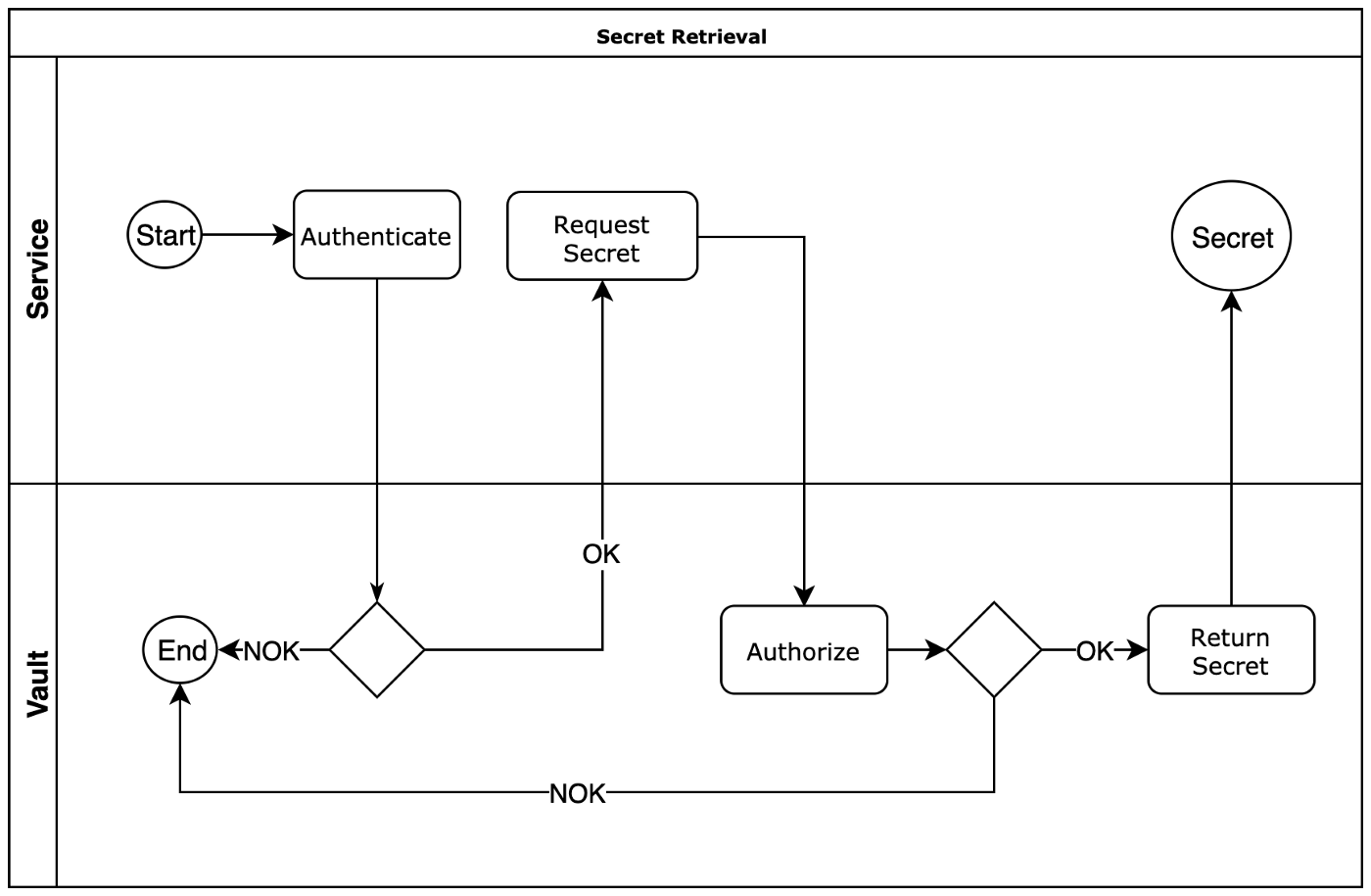

The workflow for secret retrieval looks like this:

Secret retrieval workflow

Sample secret retrieval procedure:

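```python
import sys

import hvac

VAULT_ADDR = "https://vault.itim.vn:8200"
VAULT_TOKEN_PATH = "/etc/coccoc/vault/token_admin"


class Vault(object):
    """Thin wrapper around hvac that authenticates with a token read from a file."""

    def __init__(self, url=VAULT_ADDR, token_path=VAULT_TOKEN_PATH):
        # The token file is protected by filesystem permissions on the host.
        with open(token_path, "r") as tp:
            token = tp.read().strip()
        self.vault = hvac.Client(url=url, token=token)
        if not self.vault.is_authenticated():
            raise RuntimeError("Unauthenticated!")

    def read_secret(self, path, key):
        # Assumes a KV version 1 engine mounted at secret/: values sit under "data".
        secret = self.vault.read("secret/" + path)
        if secret is None:
            raise KeyError("no secret at secret/" + path)
        return secret["data"][key]


if __name__ == "__main__":
    # Usage: python vault.py <path under secret/> <key>
    vault = Vault()
    print(vault.read_secret(sys.argv[1], sys.argv[2]))
```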

python2 sample secret retrieval

```
# python vault.py admin/passwd/secret root-passwd
Abcxyz
```

By placing this Vault class in a system-wide Python library location, the secret retrieval procedure can easily be integrated into existing services and scripts.

This simple design solves the problem for traditional services, which can simply read the token file. But what about containerized services? With the current approach, we would need either to mount the token into the container or to embed the token file, itself a secret, into the container image. Moreover, for services deployed across many physical nodes, the token file must exist on every host, so it ends up in many places.

Into the Kubernetes world

As stated above, Kubernetes services have different characteristics from their traditional counterparts, and the token-file approach does not fit them well. Vault offers another authentication method designed specifically for Kubernetes, described below.

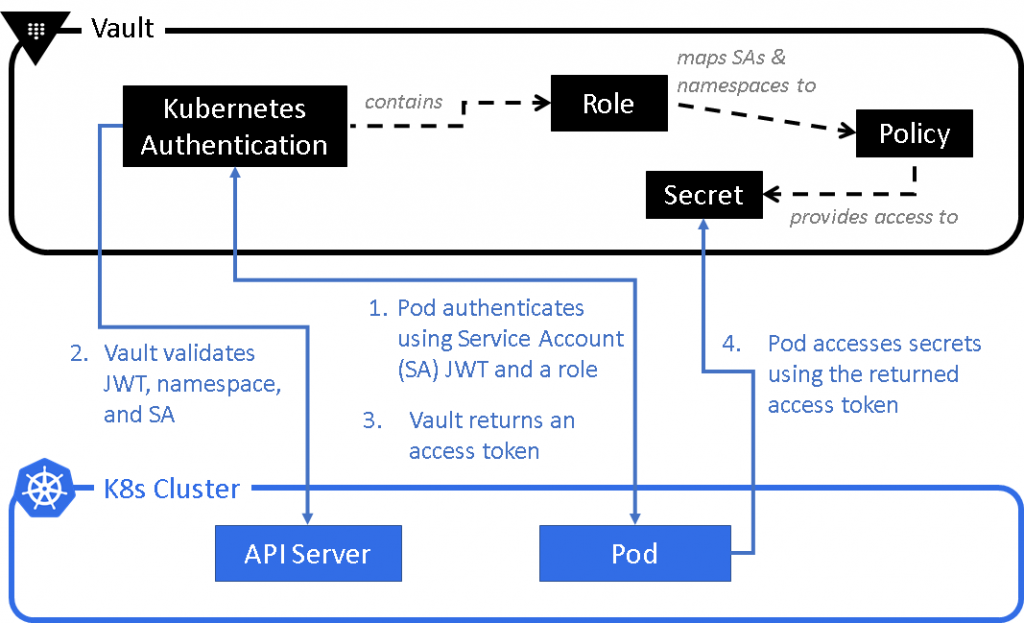

Vault — Kubernetes Auth workflow

The workflow can be explained simply like this (though the complete setup is not very straightforward):

- The pod uses its service account token (mounted into every pod by default) to request a Vault token:

```
Mounts: /var/run/secrets/kubernetes.io/serviceaccount from default-token-x27q4 (ro)
```

- The service account token is presented to Vault's Kubernetes auth method.

- Vault then verifies it with the K8s API server. If Vault and K8s are set up correctly and the service account token is valid, Vault sends back a temporary Vault token (similar to the token described earlier, but with a short TTL), which the pod uses to retrieve the secret.
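The exchange above can be sketched with the Python standard library and Vault's HTTP API. This is a minimal sketch assuming the auth method is mounted at the default `kubernetes` path; the helper names and the role name are hypothetical, and this is not how vault-env is implemented. The returned `lease_duration` is the short TTL mentioned above.

```python
import json
import urllib.request

# Default location of the service account token inside a pod.
SA_TOKEN_PATH = "/var/run/secrets/kubernetes.io/serviceaccount/token"


def build_login_request(vault_addr, role, jwt, mount="kubernetes"):
    """Build the POST request for Vault's Kubernetes auth login endpoint."""
    url = "%s/v1/auth/%s/login" % (vault_addr.rstrip("/"), mount)
    body = json.dumps({"role": role, "jwt": jwt}).encode("utf-8")
    return urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )


def parse_login_response(payload):
    """Return (client_token, ttl_seconds) from a login response body."""
    auth = json.loads(payload)["auth"]
    return auth["client_token"], auth["lease_duration"]


def kubernetes_login(vault_addr, role, token_path=SA_TOKEN_PATH):
    """Exchange the pod's service account token for a short-lived Vault token."""
    with open(token_path) as fh:
        jwt = fh.read().strip()
    with urllib.request.urlopen(build_login_request(vault_addr, role, jwt)) as resp:
        return parse_login_response(resp.read())
```

Inside a pod, `kubernetes_login("https://vault.itim.vn:8200", "my-app")` would return a token usable with the same read calls as the token-file approach; `my-app` stands for whatever Vault role is bound to the pod's service account.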

In production, we use a special container, vault-env, to avoid all the manual work. It performs all of the steps above to obtain the secret and then exposes it as an environment variable, which can be injected into the main container.
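The environment-variable step can be sketched as follows, using the `vault:<path>#<key>` reference format that appears in the Nginx deployment example later in this section. This is a hypothetical re-implementation for illustration, not vault-env's source: it assumes a KV version 1 mount (as in the Python sample above) and takes the secret reader as a parameter so it can be combined with any authentication method.

```python
import json
import urllib.request

PREFIX = "vault:"


def parse_ref(value):
    """Split 'vault:<path>#<key>' into (path, key); return None for plain values."""
    if not value.startswith(PREFIX):
        return None
    path, sep, key = value[len(PREFIX):].rpartition("#")
    if not sep or not path or not key:
        raise ValueError("malformed Vault reference: %r" % value)
    return path, key


def read_kv1(vault_addr, token, path):
    """Read a KV version 1 secret over the HTTP API and return its data dict."""
    req = urllib.request.Request(
        "%s/v1/%s" % (vault_addr.rstrip("/"), path),
        headers={"X-Vault-Token": token},
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())["data"]


def resolve_env(environ, read_secret):
    """Return a copy of environ with each vault: reference replaced by its secret.

    read_secret(path) must return the secret's key/value dict; each distinct
    path is fetched only once, even if several variables point into it.
    """
    fetched = {}
    resolved = {}
    for name, value in environ.items():
        ref = parse_ref(value)
        if ref is None:
            resolved[name] = value  # ordinary variable, passed through untouched
            continue
        path, key = ref
        if path not in fetched:
            fetched[path] = read_secret(path)
        resolved[name] = fetched[path][key]
    return resolved
```

For example, `resolve_env(os.environ, lambda p: read_kv1(addr, token, p))` would turn `SSL_KEY=vault:secret/k8s/cert/coccoc_wildcard#key` into the actual private key, where `token` is the short-lived token from the Kubernetes login.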

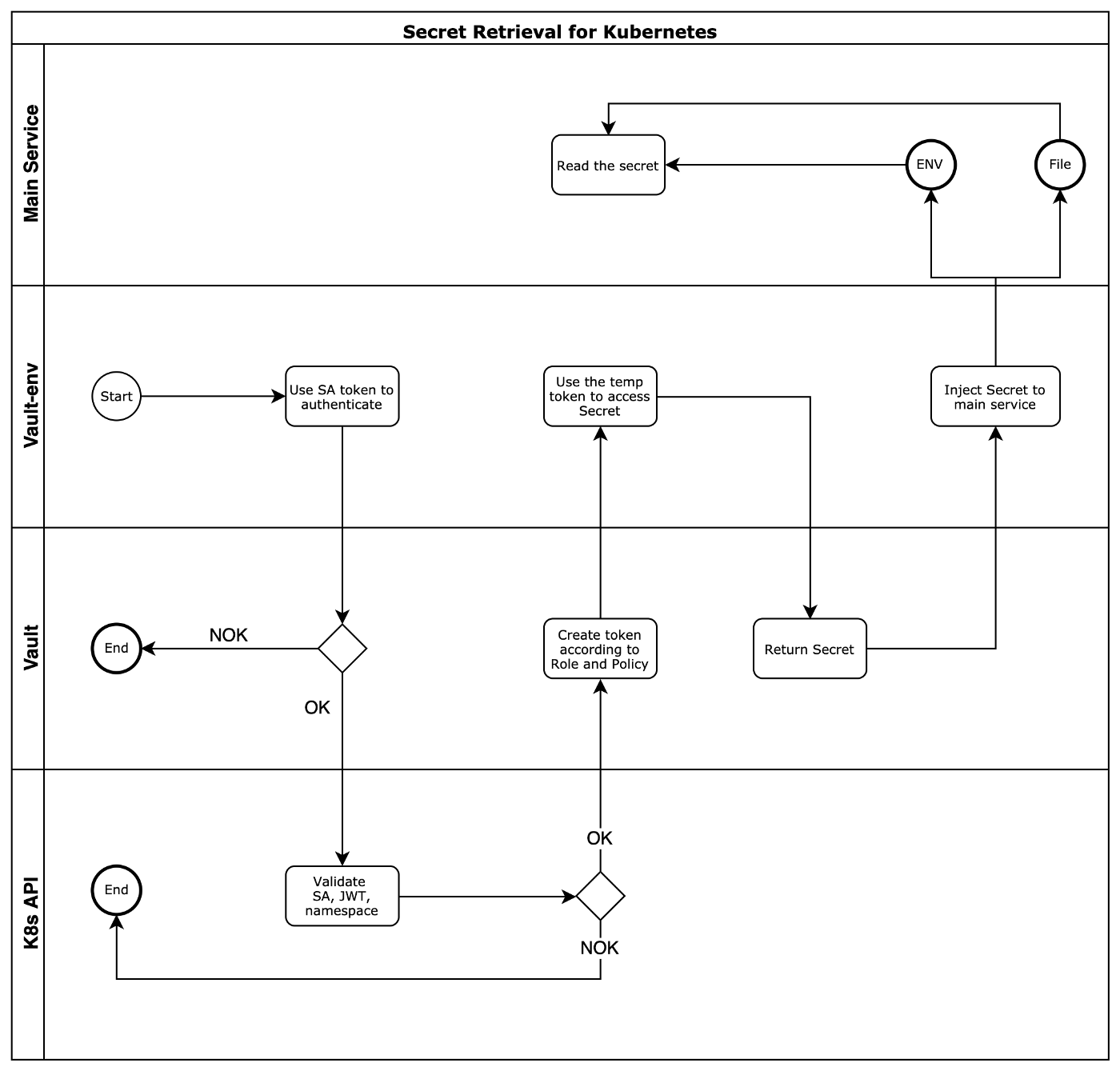

The secret retrieval workflow for Kubernetes services:

Kubernetes Secret retrieval workflow

As can be seen, the secret can be injected into the main service in 2 ways:

- File-based: after receiving the secret, vault-env writes it to an emptyDir volume shared between the containers in the pod. The main container then reads the secret from the file.

- ENV var-based: there is no easy way to pass an environment variable from one container to another inside a pod, so we use a trick: the vault-env binary is placed in front of the main service's real command, so the environment variables vault-env sets are passed on to the main process.
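The ENV var trick works because of exec: the wrapper does not start the main command as a child process, it turns into it, so whatever environment the wrapper builds becomes the main command's environment. A minimal sketch of that final step, assuming the secrets have already been resolved into a plain dict (the function names are ours, not vault-env's):

```python
import os


def build_child_env(base_env, secrets):
    """Merge resolved secrets over the inherited environment (secrets win)."""
    env = dict(base_env)
    env.update(secrets)
    return env


def exec_with_secrets(argv, secrets):
    """Replace this process with argv, running it with the secrets in its ENV.

    os.execvpe keeps the same PID but swaps in the new program, so variables
    that only existed in this wrapper become the main command's environment.
    It searches PATH for argv[0] and does not return on success.
    """
    os.execvpe(argv[0], argv, build_child_env(os.environ, secrets))
```

A hypothetical wrapper invoked as `wrapper nginx -g "daemon off;"` would resolve its secrets and then call `exec_with_secrets(sys.argv[1:], resolved)`; Nginx ends up as the container's main process with the secrets already in its environment.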

For example, suppose an SSL certificate and key are stored in Vault and we need to get them into an Nginx container. The design looks like this:

- vault-env runs as an init container, retrieves the secrets, and puts them into a shared emptyDir. Nginx then mounts the emptyDir at the appropriate location.

- SSL_KEY and SSL_CERT are environment variables whose values start out as Vault paths; vault-env replaces them with the real secrets, which are then written to files.

```yaml
initContainers:
  - name: credentials
    image: vault-env:coccoc
    env:
      - name: VAULT_ADDR
        value: "https://vault.itim.vn:8200"
      # vault:<path>#<key> values are replaced by vault-env with the real secrets.
      - name: SSL_KEY
        value: vault:secret/k8s/cert/coccoc_wildcard#key
      - name: SSL_CERT
        value: vault:secret/k8s/cert/coccoc_wildcard#cert
    command:
      - "/usr/local/bin/vault-env"
    args:
      - "/bin/sh"
      - "-c"
      - |
        printenv SSL_KEY > /credentials/coccoc.com_wildcard.key;
        printenv SSL_CERT > /credentials/coccoc.com_wildcard.crt;
    volumeMounts:
      - name: ssl
        mountPath: /credentials/
containers:
  - name: nginx
    image: nginx
    volumeMounts:
      # Same emptyDir, now holding the key and certificate written above.
      - name: ssl
        mountPath: /etc/nginx/ssl/
volumes:
  - name: ssl
    emptyDir: {}
```

Sample Kubernetes deployment with Vault-env

In the end, we implemented a solution that works well for both traditional services and Kubernetes services. Vault acts as the central Secret Management service: all the passwords, certificates, private keys… are stored in one place and can be retrieved by any service holding a valid token.

***

By Kubernetes, For Kubernetes

Vault gradually became more important to our infrastructure. Virtually every microservice in the system requires one or more kinds of secrets, so none of them can be deployed while Vault is down.

High Availability

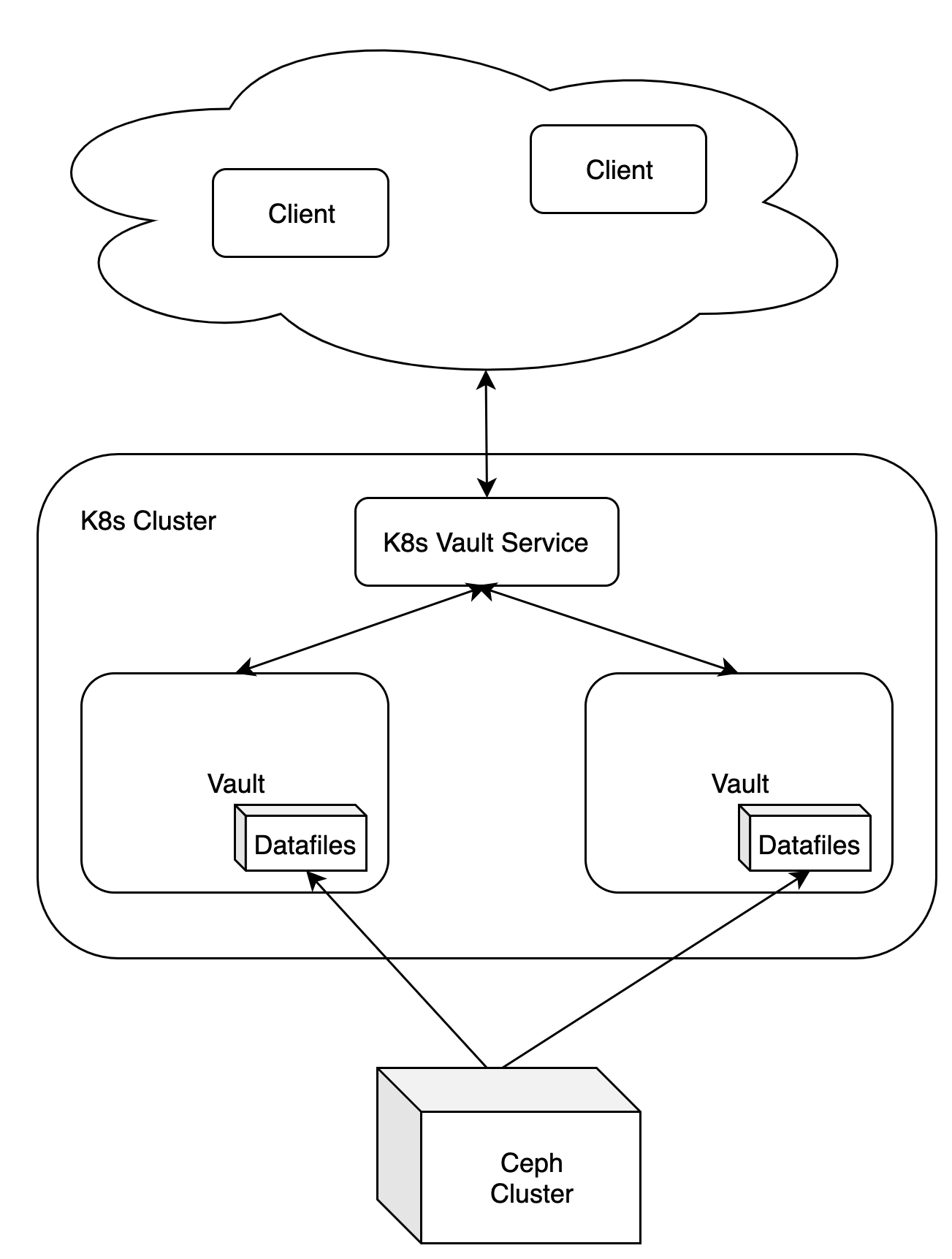

To address high availability, we decided to deploy Vault into Kubernetes, using CephFS as the Vault datastore backend (even though Vault supports HA natively when using clustered storage backends such as ZooKeeper / etcd or databases…).

Vault HA architecture

The architecture is simply an extension of the initial simple design. When deploying Vault in a Kubernetes cluster without a network filesystem, the pod can only run on the host that holds the Vault data, which defeats the main advantage of Kubernetes: container orchestration.

We chose CephFS as the network filesystem: it has been used in our infrastructure for a long time, it is stable and simple to set up, and, most importantly, it is supported by Kubernetes. With CephFS, Vault can be scaled to more than one pod, orchestrated by Kubernetes, all sharing the same datastore. To keep the instances in sync with each other, the cache must be turned off with disable_cache, so that when one instance updates the data, the others re-read the storage and see the update.
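Why the cache has to go can be shown with a toy model. This is plain Python, not Vault's storage code: two instances share one storage (standing in for the CephFS directory), and each may keep an in-memory read cache, as Vault does unless `disable_cache = true` is set in its server configuration.

```python
class SharedStorage:
    """Stands in for the CephFS directory that every Vault pod mounts."""

    def __init__(self):
        self.files = {}


class Instance:
    """Toy server that reads through an optional in-memory cache."""

    def __init__(self, storage, use_cache=True):
        self.storage = storage
        self.use_cache = use_cache
        self.cache = {}

    def write(self, key, value):
        self.storage.files[key] = value
        if self.use_cache:
            self.cache[key] = value  # only this instance's cache is updated

    def read(self, key):
        if self.use_cache and key in self.cache:
            return self.cache[key]  # may be stale if another instance wrote since
        value = self.storage.files.get(key)
        if self.use_cache:
            self.cache[key] = value
        return value


def demo(use_cache):
    storage = SharedStorage()
    a, b = Instance(storage, use_cache), Instance(storage, use_cache)
    a.write("secret/db", "old")
    b.read("secret/db")          # b sees "old" (and caches it if caching is on)
    a.write("secret/db", "new")  # a updates the shared storage
    return b.read("secret/db")   # what does b see now?
```

With caching on, `demo(True)` returns `"old"`: instance `b` keeps serving its stale copy. With caching off, `demo(False)` returns `"new"`, because every read goes back to the shared storage.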

On top of the Vault pods sits a Kubernetes Service, which provides service discovery and automatically adds or removes pod IPs as they become available or unavailable. Vault is exposed to the world through this Service.

Disclaimer: this HA setup is not recommended by HashiCorp, as Vault does not officially support running multiple instances on the filesystem storage backend (even when it is backed by shared CephFS).

Auto Unseal

Another Vault feature is sealing. Every time the service starts, it is sealed and can only be unsealed manually (or automatically through a fairly complex procedure involving AWS, which we don't use or cover here). Unfortunately, this does not fit the High Availability design above: whenever a Vault pod restarts, a sysadmin has to step in to unseal the service.

In our setup, unsealing Vault requires entering 3 of the 5 unseal key shares. With Kubernetes, once the Vault pod is up, we can start the unseal procedure automatically with a postStart lifecycle hook:

```yaml
lifecycle:
  postStart:
    exec:
      # Wait until Vault reports "sealed" (vault status exit code 2), then submit
      # 3 of the 5 unseal key shares from the keyshareA/B/C environment variables.
      command:
        - "sh"
        - "-c"
        - >-
          export PATH=/var/lib/vault/:$PATH;
          export VAULT_ADDR="http://127.0.0.1:8200";
          RETRIES=3;
          while sleep 3; do
            vault status >/dev/null;
            if [ $? -eq 2 ]; then
              break;
            fi;
            RETRIES=$(($RETRIES - 1));
            if [ $RETRIES -eq 0 ]; then
              echo "Vault is not ready for unseal, please check.";
              exit 3;
            fi;
          done;
          vault operator unseal $keyshareA;
          vault operator unseal $keyshareB;
          vault operator unseal $keyshareC;
          vault status;
          RET=$?;
          if [ $RET -gt 0 ]; then
            echo "unseal failed, please unseal by hand and check.";
            exit $RET;
          fi;
```
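The "3 of 5" rule comes from Shamir's Secret Sharing, which Vault uses to split the root key needed to unseal it: the secret is the constant term of a random degree-2 polynomial, each key share is one point on that polynomial, and any 3 points determine it while 2 reveal nothing. The sketch below is a textbook illustration over a prime field, not Vault's implementation (which works byte by byte in GF(2^8)), and it should not be used for real keys.

```python
import secrets

PRIME = 2**127 - 1  # a Mersenne prime; the secret must be smaller than this


def split(secret, threshold, shares):
    """Split an integer secret into `shares` points; any `threshold` recover it."""
    if not 0 <= secret < PRIME:
        raise ValueError("secret out of range")
    # Random polynomial of degree threshold-1 whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]

    def f(x):
        acc = 0
        for c in reversed(coeffs):  # Horner's rule, mod PRIME
            acc = (acc * x + c) % PRIME
        return acc

    return [(x, f(x)) for x in range(1, shares + 1)]


def combine(points):
    """Recover the secret by Lagrange interpolation of the points at x = 0."""
    total = 0
    for j, (xj, yj) in enumerate(points):
        num, den = 1, 1
        for m, (xm, _) in enumerate(points):
            if m != j:
                num = num * xm % PRIME
                den = den * (xm - xj) % PRIME
        # Division in the field: multiply by the modular inverse (Fermat's little theorem).
        total = (total + yj * num * pow(den, PRIME - 2, PRIME)) % PRIME
    return total
```

`split(s, 3, 5)` yields five shares, and `combine` returns `s` for any three of them, which is why the postStart hook only needs `keyshareA`, `keyshareB`, and `keyshareC`.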

***

Final Thoughts

There are many other Secret Management solutions out there, each with its own pros and cons. The best thing about this design is that, by combining Kubernetes with a simple service like HashiCorp Vault, we could set up a fairly complete architecture that satisfies the requirements defined at the start. There is still work ahead on secrets, but the current solution solves the problem of exposed credentials, makes the infrastructure more secure, and looks a lot more professional!